Interactive perception

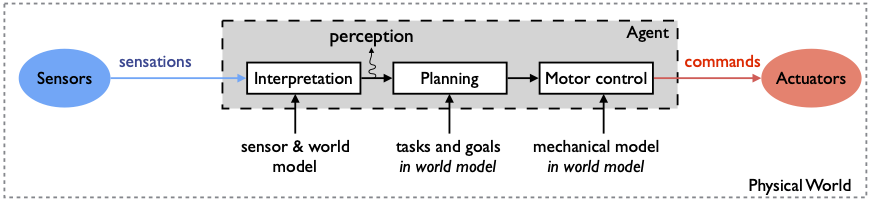

Most existing work on robot perception (and this includes my own on robot audition!) relies on the traditional, somewhat historical, perceive/plan/act scheme:

- first, the robot reads and interprets its sensations on the basis of a priori models provided by the engineers (for instance auditory, camera, or laser models, which try to bridge the gap between the raw sensor outputs and their high-level interpretation)

- then, the system plans its action inside a generally well-known environment, whose characteristics are often identified beforehand

- finally, the action is actually performed by controlling the joints thanks to geometric/kinematic/dynamic models and controllers.

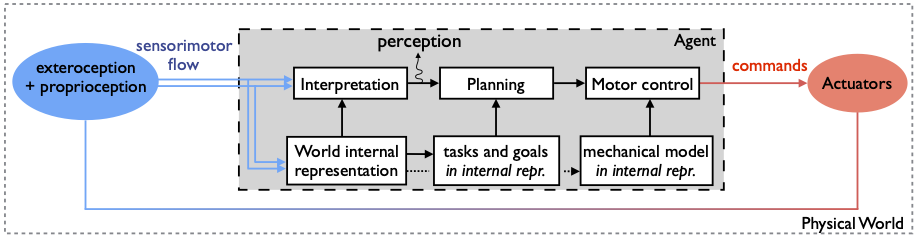
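The three steps above can be sketched as a simple control loop. This is only a toy illustration under stated assumptions: the one-dimensional robot `Robot1D`, its `sensor_gain`, the proportional planner, and all function names are hypothetical and do not come from any particular system.

```python
from dataclasses import dataclass


@dataclass
class Robot1D:
    """Hypothetical robot moving on a line (illustration only)."""
    position: float = 0.0
    sensor_gain: float = 2.0  # true sensor characteristic, unknown to the controller

    def read_sensor(self) -> float:
        # Raw sensor output: a scaled version of the true position.
        return self.sensor_gain * self.position

    def apply(self, velocity: float, dt: float = 0.1) -> None:
        # Low-level actuation: integrate the commanded velocity.
        self.position += velocity * dt


def perceive(raw: float, model_gain: float) -> float:
    """Interpret the raw reading through an a priori sensor model."""
    return raw / model_gain


def plan(estimate: float, goal: float, k: float = 1.0) -> float:
    """Choose a velocity command proportional to the estimated error."""
    return k * (goal - estimate)


def act(robot: Robot1D, command: float) -> None:
    """Execute the command on the robot."""
    robot.apply(command)


def run(robot: Robot1D, goal: float, model_gain: float, steps: int = 200) -> float:
    """Repeat perceive -> plan -> act and return the final true position."""
    for _ in range(steps):
        estimate = perceive(robot.read_sensor(), model_gain)
        command = plan(estimate, goal)
        act(robot, command)
    return robot.position


good = run(Robot1D(), goal=1.0, model_gain=2.0)  # a priori model matches the sensor
bad = run(Robot1D(), goal=1.0, model_gain=1.0)   # a priori model is wrong
print(good, bad)
```

In this sketch, the loop drives the robot to the goal when `model_gain` matches the true `sensor_gain`, whereas with a mismatched gain the robot settles at a different position: the quality of the result depends entirely on the a priori model.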

This very generic approach does work pretty well! As long as your models (of the robot, its sensors, the world, etc.) fit reality well enough for the given task, quite amazing results can be obtained. But dealing with incomplete models (and they always are incomplete!) and facing unpredictable situations where your models no longer apply is still a very difficult task. One solution could consist in questioning the aforementioned perception architecture. One could indeed envisage a new way to deal with perception, in which this ability is no longer given a priori by the designer of the system, but is instead built and discovered by the robot, not from the raw sensory signals (i.e. the outputs of its sensors), but from the sensorimotor flow (i.e. the data coming from exteroceptive and/or proprioceptive sensors, together with the commands sent to the joints). The previous architecture then turns into the one shown in the next figure.

In this line of research, the emergence of internal representations of the robot's interaction with its own environment is a central issue. It is indeed only on the basis of these representations that the robot will be able to plan and act in its environment (and not on a priori models as in previous approaches). This question is absolutely fundamental when trying to build robots with autonomy and adaptability, which are mandatory capabilities in modern applications of robotics.

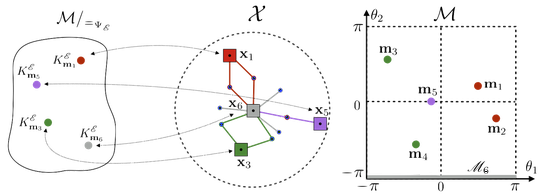

I chose to work on these topics through the sensorimotor contingencies theory, which explains that perceiving is analogous to discovering stable relations (i.e. contingencies) linking motor and sensory events. Characterizing these invariants (that is, determining precisely what they are and what their properties are) might then help to investigate how internal representations built from them could allow a robot to infer spatial structures with almost no a priori knowledge. For that purpose, we have already tackled some of these aspects through two main contributions, mainly addressed from a theoretical (mathematical) point of view:

- space dimension estimation

- structuring of the sensorimotor flow:

  - internal representation of the body

  - internal representation of the agent's working space

  - structuring of the agent's actions through sensory prediction

  - emergence of a subjective sensory continuity
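To make the idea of "stable relations linking motor and sensory events" more concrete, here is a minimal toy sketch. It is not the method used in the contributions listed above: the ring world, the three motor commands, and every function name are assumptions made purely for illustration. The agent knows nothing about the layout of its world. It only records the sensorimotor flow and keeps the (sensation, command) pairs that always lead to the same next sensation.

```python
import random
from collections import defaultdict


def make_world(n_cells, seed=0):
    """Hypothetical ring world: each cell holds a distinct, arbitrary sensor value."""
    rng = random.Random(seed)
    values = list(range(100, 100 + n_cells))
    rng.shuffle(values)
    return values


def record_flow(world, commands, start=0):
    """Return the sensorimotor flow as (sensation, command, next sensation) triples."""
    position = start
    flow = []
    for command in commands:
        before = world[position]
        position = (position + command) % len(world)  # hidden from the agent
        flow.append((before, command, world[position]))
    return flow


def learn_contingencies(flow):
    """Keep only (sensation, command) pairs with a single observed outcome."""
    outcomes = defaultdict(set)
    for before, command, after in flow:
        outcomes[(before, command)].add(after)
    return {key: next(iter(seen)) for key, seen in outcomes.items() if len(seen) == 1}


rng = random.Random(1)
world = make_world(6)
commands = [rng.choice([-1, 0, 1]) for _ in range(500)]  # random motor babbling
flow = record_flow(world, commands)
contingencies = learn_contingencies(flow)

# The learned table lets the agent predict its next sensation from its
# current sensation and the command it is about to send.
for (sensation, command), predicted in sorted(contingencies.items()):
    print(f"sensing {sensation}, command {command:+d} -> expect {predicted}")
```

In this deliberately simple world every relation is deterministic, so the table amounts to a sensory prediction model built only from the flow, without any a priori description of the sensor or of the space.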